High-performance computing increasingly competes on energy efficiency, and designs such as a 28nm 64-kb 31.6-TFLOPS/W digital-domain floating-point computing unit show where that metric is heading.

The unit is built on a 28nm process, includes 64 kb of on-chip memory (or cache), and reports an energy efficiency of 31.6 teraflops per watt (TFLOPS/W). It illustrates the push for high computational throughput at low power consumption. In this blog, we’ll break down its key specifications, real-world applications, and the future potential of such designs.

Technical Overview

What Is a Digital-Domain Floating-Point Computing Unit?

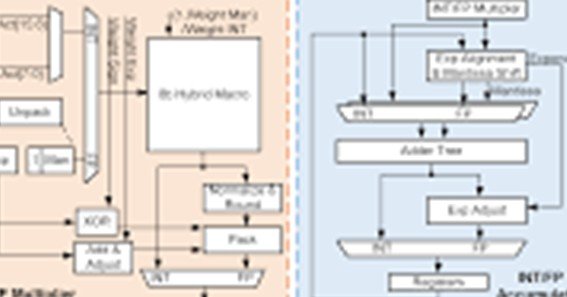

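A digital-domain floating-point computing unit (FPU) is a specialized processor component designed to handle floating-point arithmetic efficiently. “Digital-domain” means the arithmetic is carried out with digital logic rather than analog circuits, and “floating-point” means each number is stored as a sign, an exponent, and a fraction, so that very large and very small values share one format. These units are crucial for applications that require extensive mathematical computation, such as scientific simulations, machine learning algorithms, and graphics processing.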
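To make “floating-point arithmetic” concrete, the sketch below splits a number into the sign, exponent, and fraction fields that a floating-point unit operates on. It assumes the IEEE 754 single-precision layout purely for illustration; the article does not say which formats this unit supports, and the function names are hypothetical.

```python
import struct


def decompose_float32(x: float) -> tuple[int, int, int]:
    """Return (sign, biased exponent, fraction) of x rounded to IEEE 754 single precision."""
    # Pack as a 32-bit float, then reinterpret the same 4 bytes as an unsigned integer.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31               # 1 bit
    exponent = (bits >> 23) & 0xFF  # 8 bits, stored with a bias of 127
    fraction = bits & 0x7FFFFF      # 23 bits, implicit leading 1 for normal numbers
    return sign, exponent, fraction


def rebuild_normal(sign: int, exponent: int, fraction: int) -> float:
    """Reconstruct the value of a normal number (exponent not 0 and not 255)."""
    return (-1) ** sign * (1 + fraction / 2**23) * 2.0 ** (exponent - 127)


sign, exponent, fraction = decompose_float32(-6.5)
print(sign, exponent, fraction)                  # fields of -6.5
print(rebuild_normal(sign, exponent, fraction))  # value rebuilt from the fields
```

Here -6.5 is stored as -1.625 × 2², so the sign bit is 1 and the biased exponent is 2 + 127 = 129.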

Key Specifications

- Process Node (28nm):

The 28nm technology node is a mature and robust semiconductor process. It balances performance, cost, and power consumption, which makes it suitable for high-performance applications while keeping fabrication affordable.

- Memory Capacity (64-kb):

The 64 kb of on-chip memory or cache reduces latency and improves the throughput of the FPU by keeping intermediate results and frequently used data close to the arithmetic logic. Note that a lowercase “b” conventionally denotes bits, so “64-kb” most likely means 64 kilobits (8 kilobytes) rather than 64 kilobytes.

- Energy Efficiency (31.6-TFLOPS/W):

At 31.6 teraflops per watt (TFLOPS/W), the unit performs 31.6 trillion floating-point operations for every joule of energy it consumes. Such efficiency is particularly significant in data centers and high-performance computing clusters where power consumption is a critical concern.
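The headline figures can be turned into more tangible quantities with a little arithmetic. The sketch below assumes that “kb” means kilobits with 1 kb = 1024 bits, and treats 31.6 TFLOPS/W as a peak figure; neither assumption is confirmed by the specifications above, and the function names are illustrative.

```python
EFFICIENCY_TFLOPS_PER_W = 31.6  # headline efficiency figure
MEMORY_KILOBITS = 64            # "64-kb", read here as kilobits (assumption)


def energy_per_flop_joules(tflops_per_watt: float) -> float:
    """FLOPS per watt equals operations per joule, so the inverse is joules per operation."""
    return 1.0 / (tflops_per_watt * 1e12)


def values_that_fit(kilobits: int, bits_per_value: int) -> int:
    """Number of fixed-width values that fit in the memory (1 kb taken as 1024 bits)."""
    return (kilobits * 1024) // bits_per_value


femtojoules = energy_per_flop_joules(EFFICIENCY_TFLOPS_PER_W) * 1e15
print(f"{femtojoules:.1f} fJ per floating-point operation")
for width in (16, 32):
    print(f"{values_that_fit(MEMORY_KILOBITS, width)} values at {width} bits each")
```

Under these assumptions, one operation costs roughly 31.6 femtojoules, and the memory holds 4096 16-bit or 2048 32-bit values. If the figure were kilobytes instead, the capacities would be eight times larger.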

Applications and Industry Impact

The design and performance of this digital-domain floating-point computing unit have far-reaching implications in several sectors:

- High-Performance Computing (HPC):

In supercomputers and HPC clusters, units with such efficiency can significantly reduce energy costs while performing complex scientific calculations, weather forecasting, and simulations.

- Artificial Intelligence and Machine Learning:

The ability to perform vast numbers of floating-point operations per watt makes these FPUs ideal for training and inference in deep learning applications, where speed and energy efficiency directly impact performance.

- Graphics and Gaming:

Modern graphics processing and real-time rendering benefit from high-performance floating-point computations, providing smoother graphics and enhanced visual experiences.

- Embedded Systems:

In devices that require high computational power without compromising on energy efficiency, such as autonomous vehicles or IoT devices, these FPUs can offer both performance and sustainability.
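As a rough way to reason about the energy savings mentioned above, the following sketch estimates the energy a workload would need and the throughput available under a fixed power budget. It assumes the 31.6 TFLOPS/W figure is sustained for the whole workload, which real systems rarely achieve once memory traffic and data movement are included. The workload size, power budget, and baseline efficiency are hypothetical values chosen only for illustration.

```python
UNIT_TFLOPS_PER_W = 31.6     # efficiency figure quoted in this article
BASELINE_TFLOPS_PER_W = 1.0  # hypothetical comparison point, not a measured device


def workload_energy_joules(total_flop: float, tflops_per_watt: float) -> float:
    """Energy needed to run total_flop operations at a constant efficiency."""
    return total_flop / (tflops_per_watt * 1e12)


def throughput_tflops(power_budget_watts: float, tflops_per_watt: float) -> float:
    """Throughput available under a power budget, if the efficiency scaled linearly."""
    return power_budget_watts * tflops_per_watt


WORKLOAD_FLOP = 1e15       # hypothetical workload: 10^15 floating-point operations
POWER_BUDGET_WATTS = 0.5   # hypothetical embedded power budget

for label, eff in (("unit", UNIT_TFLOPS_PER_W), ("baseline", BASELINE_TFLOPS_PER_W)):
    energy = workload_energy_joules(WORKLOAD_FLOP, eff)
    rate = throughput_tflops(POWER_BUDGET_WATTS, eff)
    print(f"{label}: {energy:.1f} J for the workload, {rate:.1f} TFLOPS at {POWER_BUDGET_WATTS} W")
```

The ratio between the two energy figures is simply the ratio of the efficiencies, so the comparison is only as meaningful as the baseline chosen.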

Challenges and Future Directions

While the benefits are clear, several challenges and areas for further research remain:

- Thermal Management:

As computational density increases, managing heat dissipation becomes critical. Advanced cooling solutions and thermal design optimizations will be essential.

- Scaling Beyond 28nm:

Although the 28nm process provides an excellent balance of cost and performance, the semiconductor industry is rapidly moving toward smaller nodes (e.g., 7nm, 5nm) for even greater efficiency and performance.

- Integration with Heterogeneous Systems:

Future systems will likely integrate these FPUs with other specialized processors (like GPUs and AI accelerators) in a heterogeneous computing environment, necessitating new programming models and optimization techniques.

Conclusion

In summary, a 28nm 64-kb 31.6-TFLOPS/W digital-domain floating-point computing unit shows that a mature process node can still deliver very high energy efficiency for floating-point workloads.

By leveraging mature semiconductor processes and optimizing for both speed and power consumption, these FPUs hold promise for applications ranging from scientific research to next-generation AI. Thermal management, scaling to smaller nodes, and integration into heterogeneous systems remain the main open questions.

FAQs

- What does the “28nm” specification refer to?

The “28nm” refers to the semiconductor process node used to fabricate the computing unit. This mature technology node provides a balance between performance, cost, and power efficiency.

- How does the 64-kb memory benefit the computing unit?

The 64-kb on-chip memory (or cache) reduces latency by storing frequently accessed data and intermediate computational results close to the arithmetic logic, which raises overall processing speed and efficiency.

- What is meant by 31.6-TFLOPS/W energy efficiency?

This metric indicates that the computing unit can deliver 31.6 teraflops (trillions of floating-point operations per second) for every watt of power consumed, which is equivalent to 31.6 trillion operations per joule.

- In which industries can this FPU be most effectively utilized?

Such high-performance FPUs are well suited to high-performance computing, artificial intelligence and machine learning, graphics processing, and embedded systems in autonomous devices.

- What challenges need to be addressed for future improvements?

Key challenges include managing thermal output due to high computational density, transitioning to smaller and more efficient process nodes, and integrating these FPUs into heterogeneous computing environments for broader application.